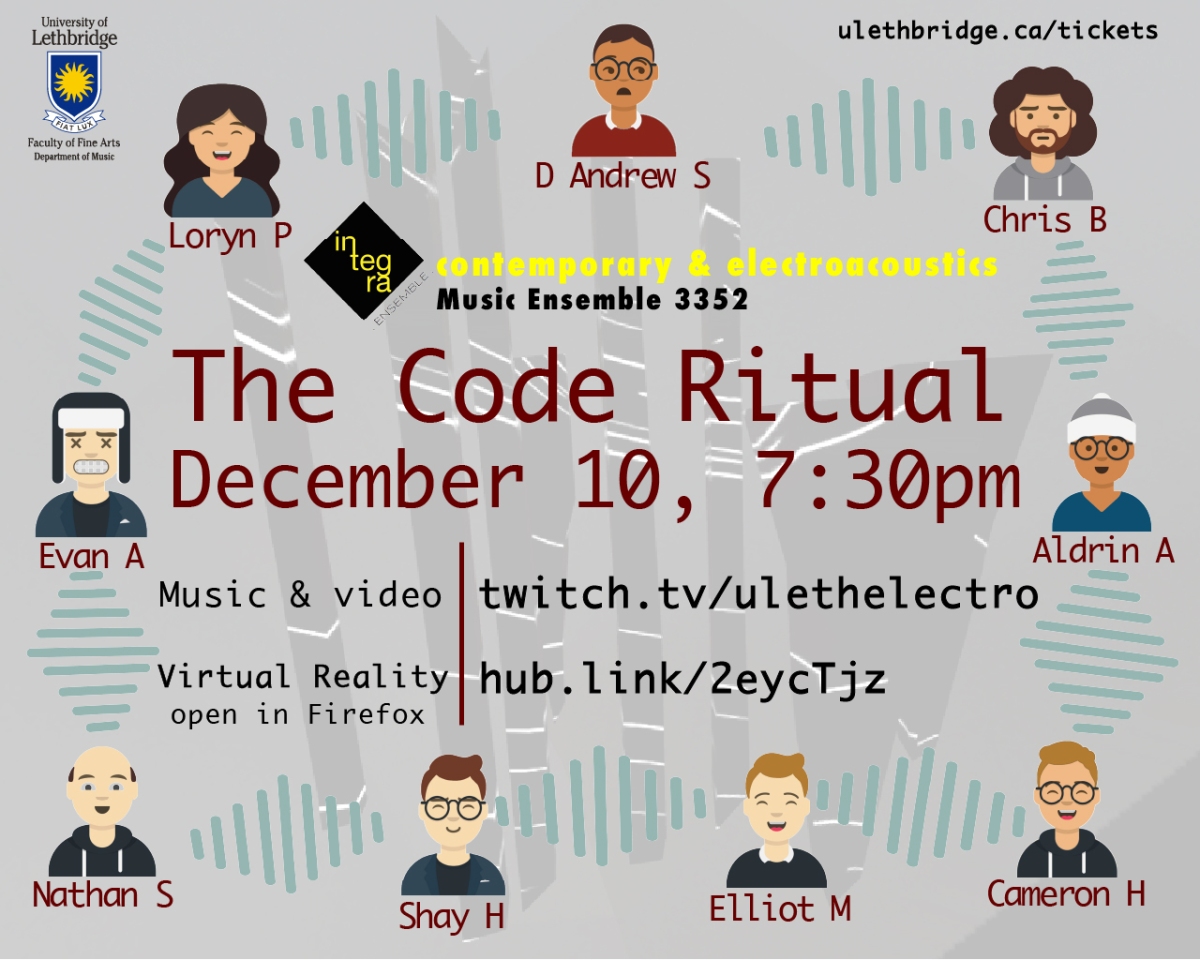

The Code Ritual

Thursday, 10 December, 2020, 7:30PM UTC-7 (MST)

On Twitch twitch.tv/ulethelectro

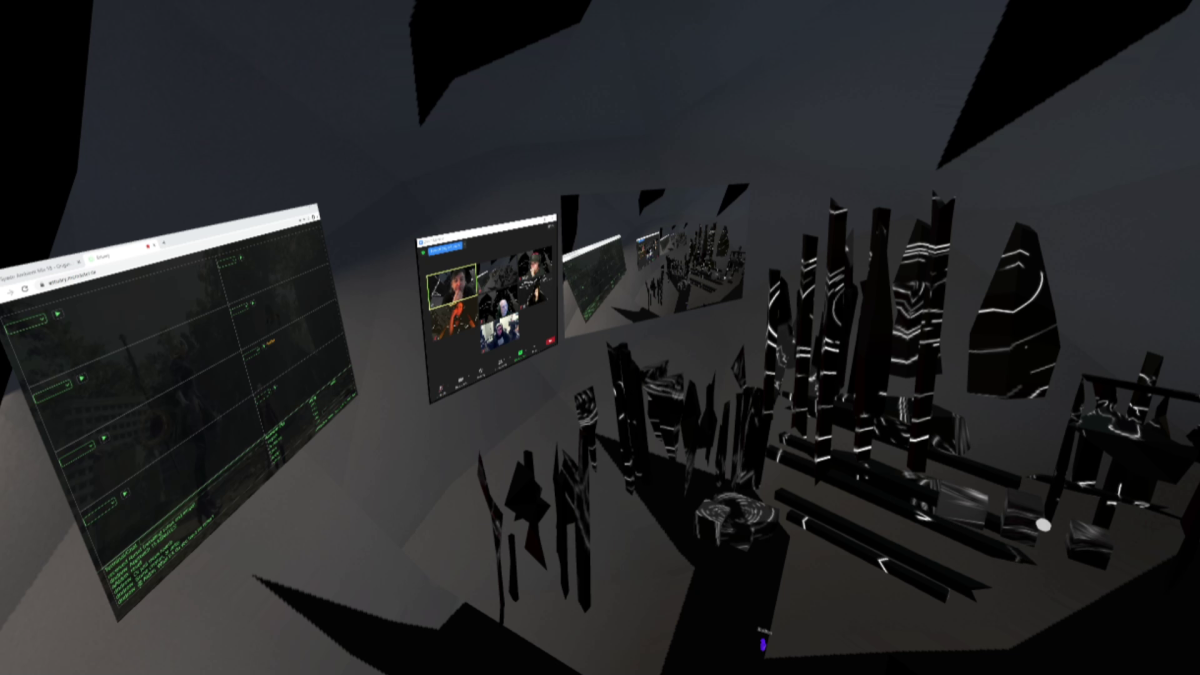

In Hubs hub.link/2eycTjz

| Piece | Ensemble / format |
| --- | --- |
| Make Sound! | everyone |
| Rocket Man | green ensemble |
| Roulette | collaborative code |
| Creation | blue ensemble |
| The Code Ritual | timed code |

Rocket Man

Rocket Man is a piece in three parts. The first part is the rocket readying for take-off and initiating countdown. It is a sequential and rhythmic section based around an audio sound bite that starts off the piece. As the A section continues, the complexity of the rhythm increases as the rocket takes off into space. The B section is lyrical, with the main melody based on a Hungarian minor scale. The rocket has reached orbit and is in constant free fall in space. Finally, the A section returns as before. However, as the end of the piece comes closer, the speed picks up and there is an increase in loudness. Slowly the rhythm devolves. The rocket is slowly failing and falling out of orbit. The piece ends suddenly, and the rhythm slows down until no sound is left: the rocket has failed and is now floating in the vacuum of space.

Roulette

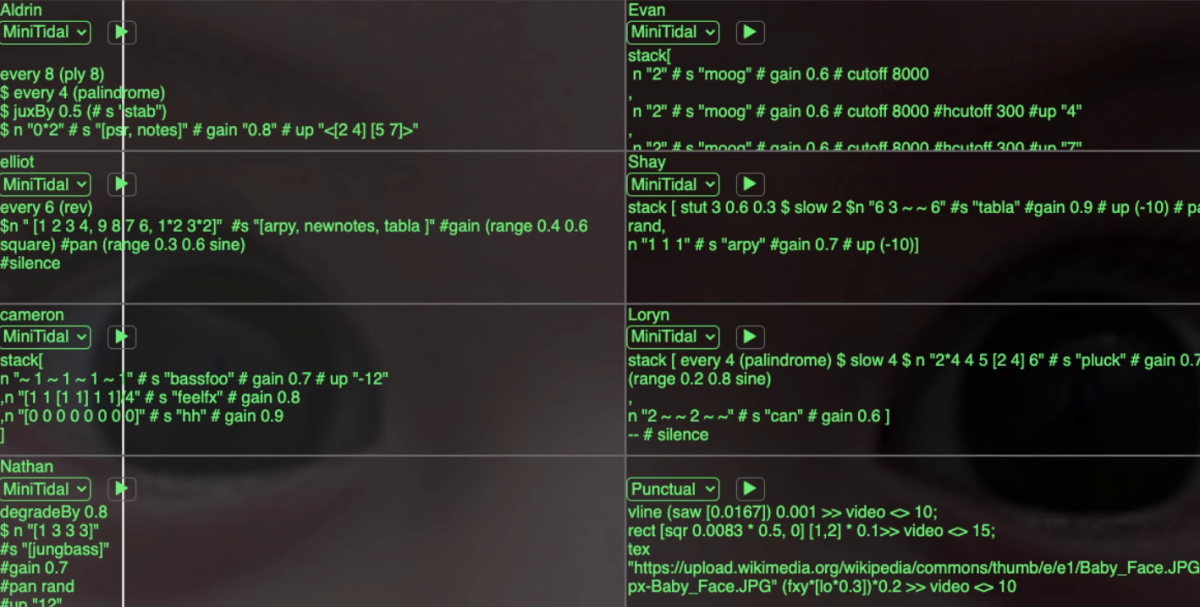

In this performance, the ensemble is split into two teams. Each performer adds their name to the queue on their team’s coding box, and when it’s their turn they have complete control of the sound! The goal is to create a collaborative coding experience where teammates play off each other’s strengths while developing a composite musical voice.

Creation

Creation is a meditative piece based on the Cree Creation story, beginning with a heartbeat. The sound of the fire emerges, and the improvisation begins. There is a curious unrest midway with a faster tempo, more frantic melodic movement, and increased tension before the inevitable return to familiarity. The piece settles back to the heartbeat to conclude. Throughout the piece, the sound of rain accompanies the narration.

The Code Ritual

The Code Ritual requires ensemble members to work as a unit, creating a cohesive musical structure. While all members are coding at the same time, rarely is the entire ensemble simultaneously producing sound. Instead, The Code Ritual results in variations of quartets, trios, and duos. Through a system of visual signals created by Punctual (a programming language for generative music and visuals), members’ sounds are cued in, and each person then has 30 to 60 seconds to alter or add to the fluid musical texture. The trick here is to try to write code that can be interpreted and built upon by other members, who are standing by to take over the musical journey.

The band

Evan Alexander

Aldrin Azucena

Chris Bernhardt

Cameron Hughes

Shay Hunter

Elliot Middleton

Loryn Plante

Nathan Stewart

Patrick Davis, Hubs support

D. Andrew Stewart, director

Acknowledgements

Integra Contemporary & Electroacoustics wants to acknowledge the invaluable support of the following people and entities, whose visual and musical networking ingenuity and expertise have found a home with the ICE ensemble.

Estuary: David Ogborn and the Estuary development team at the Networked Imagination Laboratory (NIL) at McMaster University.

Tidal Cycles: Thank you to Alex McLean and the numerous active developers for creating, building, and maintaining this algorithmic composition environment.

Graphic design by coder, sound artist and improviser Michał Seta.